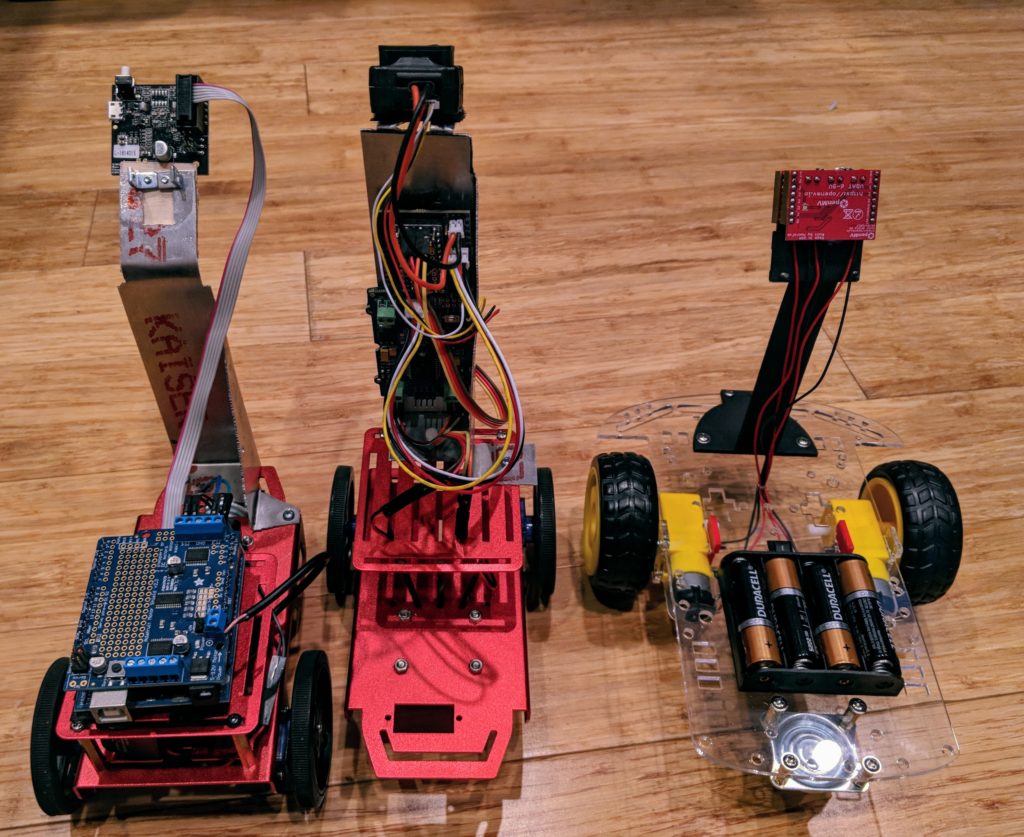

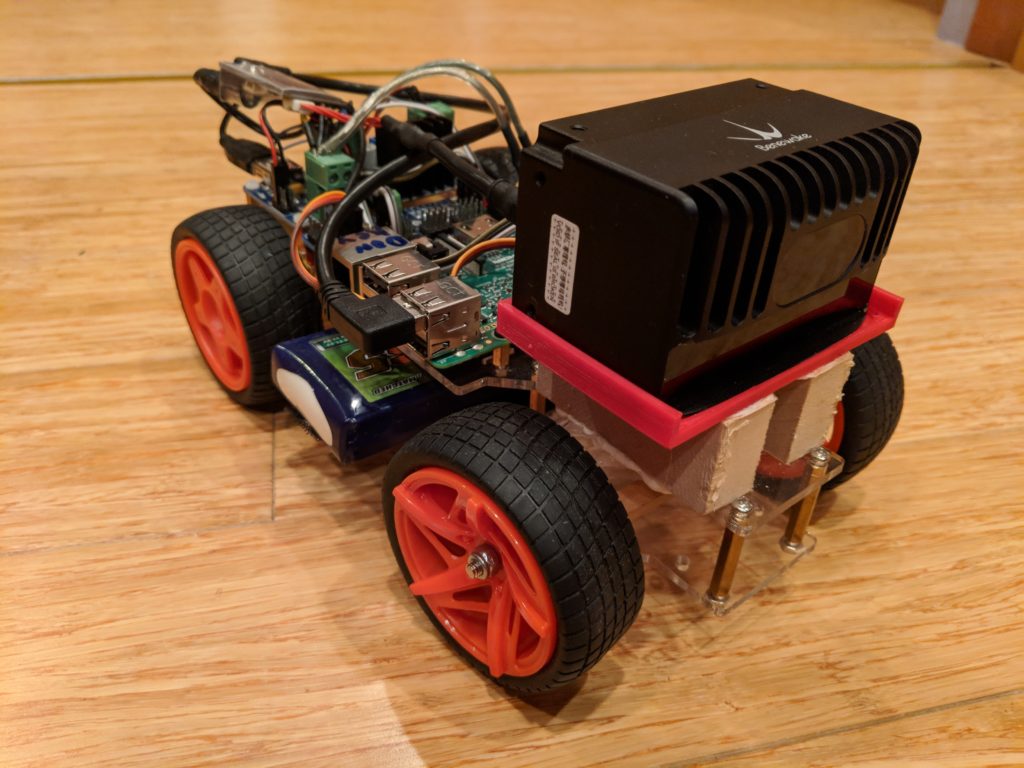

Updated Minimum Viable Racer using the latest OpenMV linear regression code

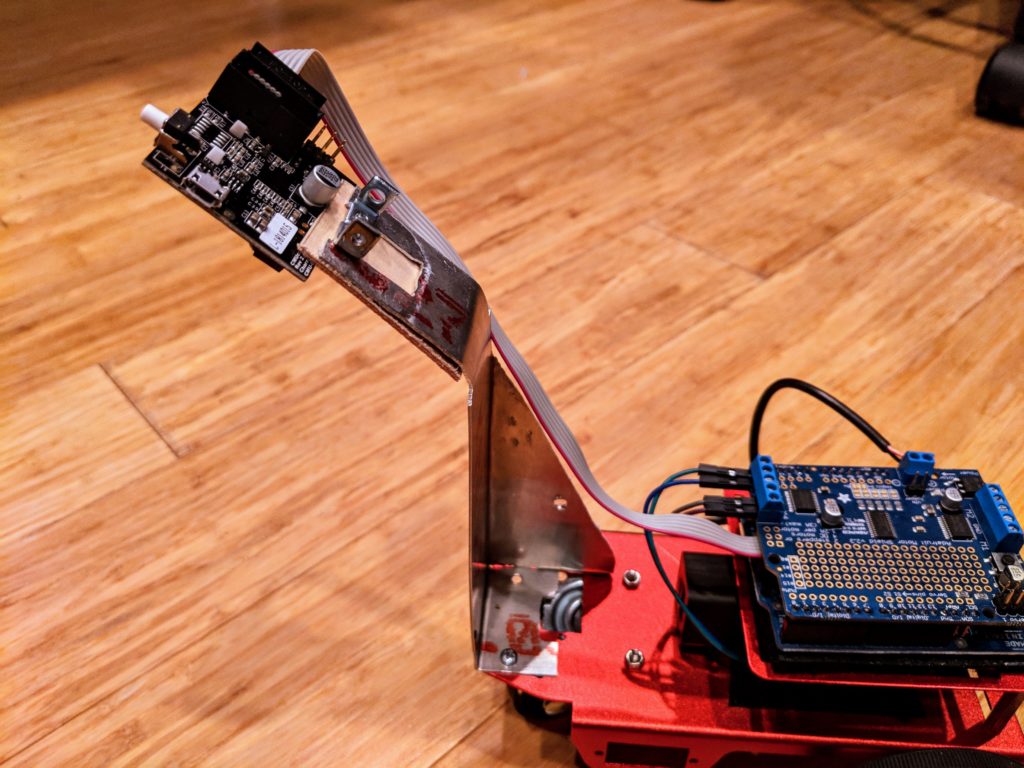

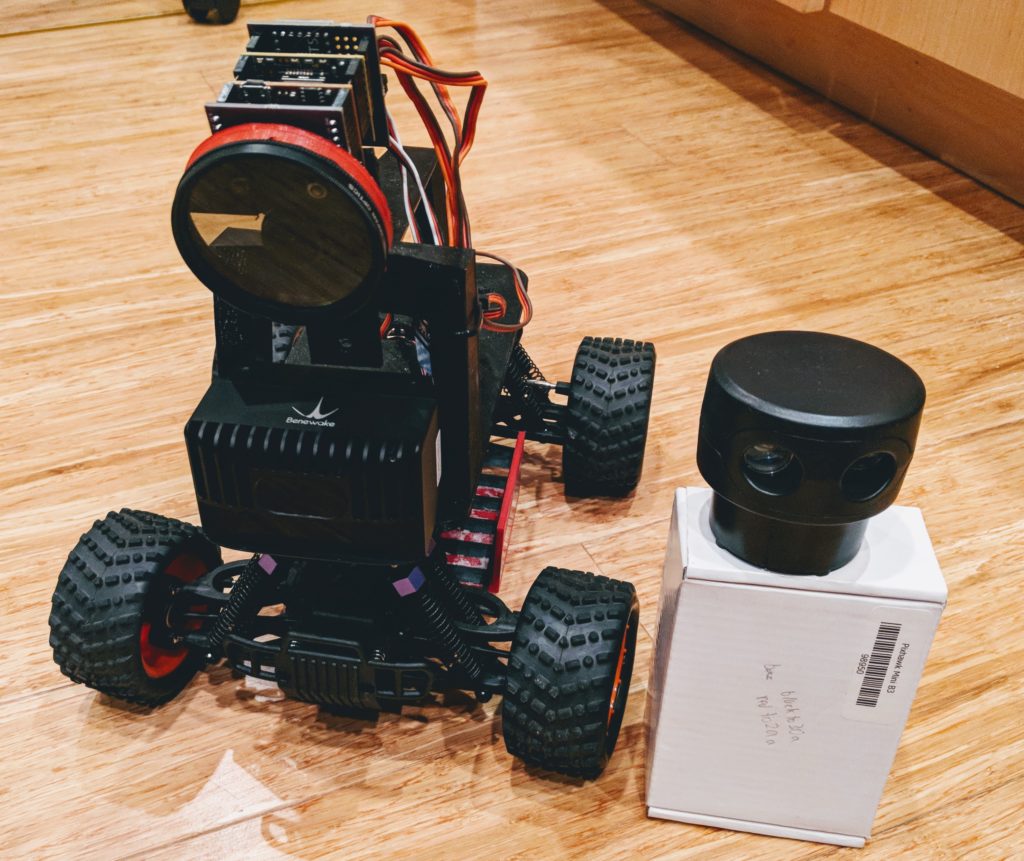

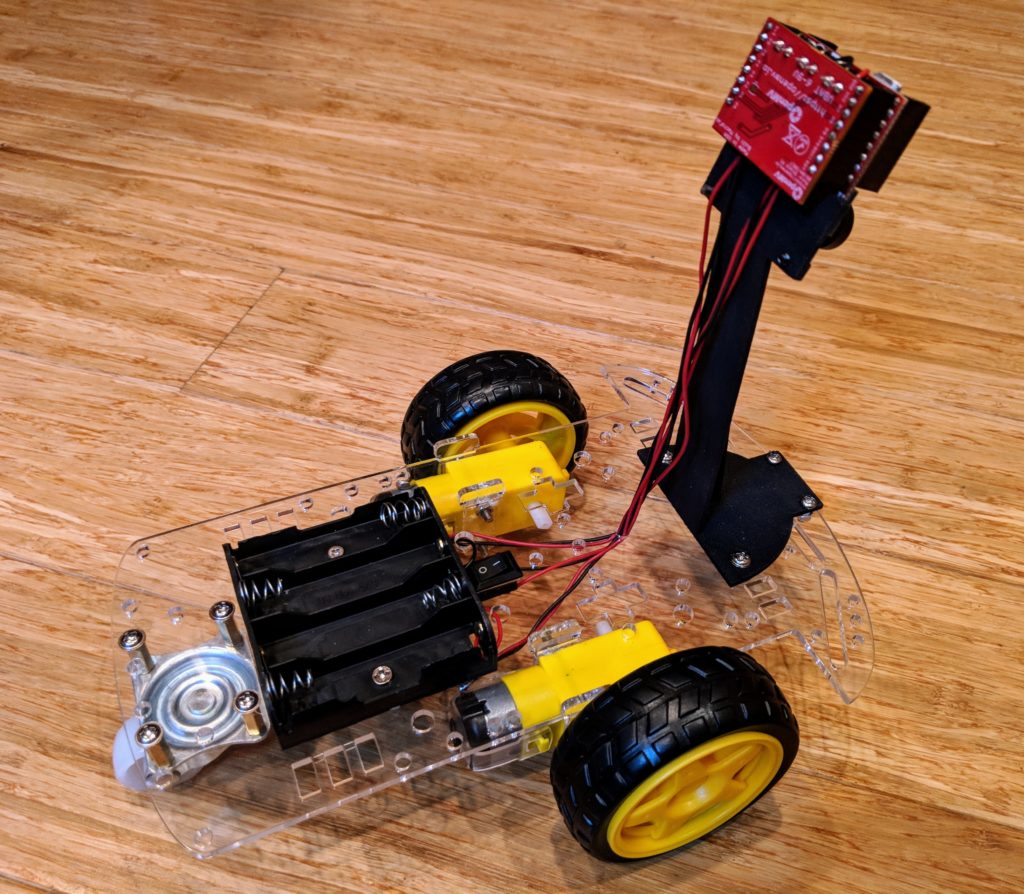

OpenMV continues to be the best and easiest way to get started with DIY Robocars, and with the forthcoming H7 version (twice the speed and memory) it’s becoming increasingly capable, too.

Our entry-level code for the “Minimum Viable Racer” uses a basic computer vision technique known as “blob tracking”. It treats track lines of a given color as rectangles with a center point, and that center point tells the car how to steer to stay on the line. This is easy to code but is a pretty blunt instrument: the rectangles cover a large area of track, and if that area includes a curve, the center point is only a rough approximation of where the line actually is.
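
To see why the rectangle center gets vague on curves, here is a minimal plain-Python sketch (it runs on a desktop, not on the camera). The helper names, the 160x120 frame size and the sample points are all made up for illustration; this is not the racer's actual code.

```python
# Sketch only: all names and sample points are hypothetical.

def bounding_rect(points):
    """Smallest axis-aligned rectangle (x, y, w, h) around the line pixels."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x, y = min(xs), min(ys)
    return x, y, max(xs) - x + 1, max(ys) - y + 1

def rect_center_x(rect):
    """Horizontal center of the rectangle, in pixels."""
    x, _, w, _ = rect
    return x + (w - 1) / 2

def steering_error(center_x, image_width):
    """Offset of the blob center from the image center, scaled to -1..1."""
    half = (image_width - 1) / 2
    return (center_x - half) / half

# A straight line up the middle of an assumed 160x120 frame.
straight = [(80, y) for y in range(0, 120, 10)]
# A line that starts at x=80 near the car (large y) and bends right far ahead.
curve = [(80 + (119 - y) ** 2 // 200, y) for y in range(0, 120, 10)]

straight_cx = rect_center_x(bounding_rect(straight))
curve_cx = rect_center_x(bounding_rect(curve))

print("straight:", straight_cx, steering_error(straight_cx, 160))
print("curve:   ", curve_cx, steering_error(curve_cx, 160))
```

In the curved case the line is still at x=80 right in front of the car, but the rectangle center lands well to the right of that, because the far end of the curve stretches the box.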

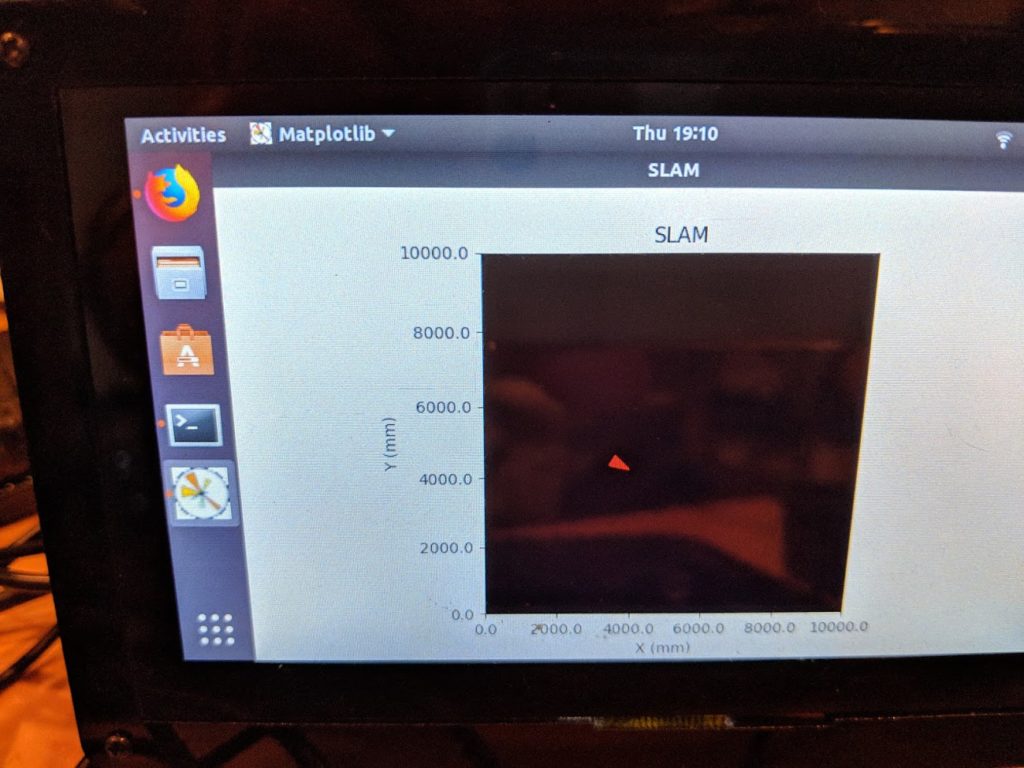

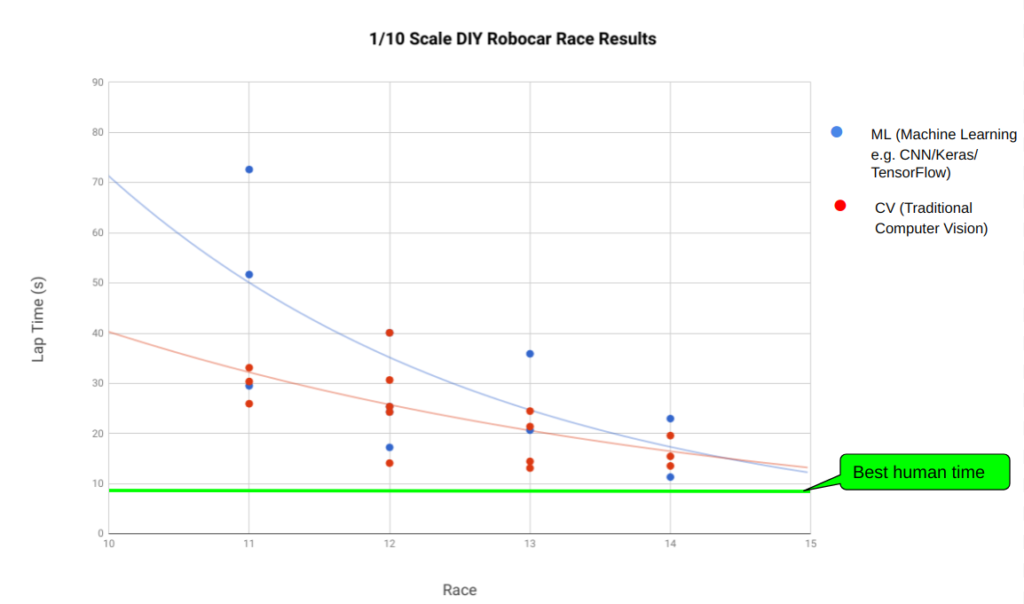

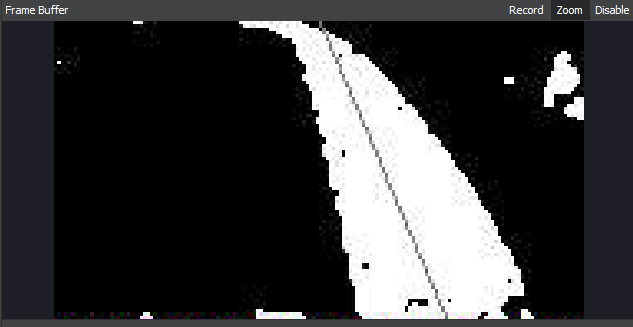

A more sophisticated computer vision approach is to run a linear regression over all the detected points of the track line. This gives a more reliable “racing line” that keeps the car closer to the actual line on the road (shown below). This is the technique used by the OpenMV-based Donkeycar, which has reliably placed in the top five in DIY Robocar races.
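
The idea behind the regression can be sketched in a few lines of plain Python. On the camera the fitting is done by OpenMV's own regression routine; the version below is just an ordinary least-squares fit with hypothetical helper names and made-up sample points, written to show what "fit a line through all the detected points" means.

```python
import math

# Sketch only: plain least squares, not the code that runs on the OpenMV.

def fit_line(points):
    """Fit x = slope * y + intercept through (x, y) pixel coordinates.

    x is regressed on y because a track line seen from the car is close
    to vertical in the image, where a y = m*x + b fit would blow up.
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_y = sum((y - mean_y) ** 2 for _, y in points)
    if var_y == 0:
        raise ValueError("need points on at least two different rows")
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = cov / var_y
    return slope, mean_x - slope * mean_y

def line_x_at(slope, intercept, y):
    """Where the fitted line crosses image row y."""
    return slope * y + intercept

# Made-up detections: a line leaning to the right as it gets farther away
# (image y grows downward, so small y is far from the car).
points = [(100 - y / 4, y) for y in range(0, 120, 10)]

slope, intercept = fit_line(points)
lean_degrees = math.degrees(math.atan(-slope))  # positive = leans right ahead
x_at_bottom = line_x_at(slope, intercept, 119)  # line position next to the car

print(slope, intercept, lean_degrees, x_at_bottom)
```

Unlike a rectangle center, the fit gives two useful numbers: how far the line is from the middle of the frame near the car, and which way it is heading.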

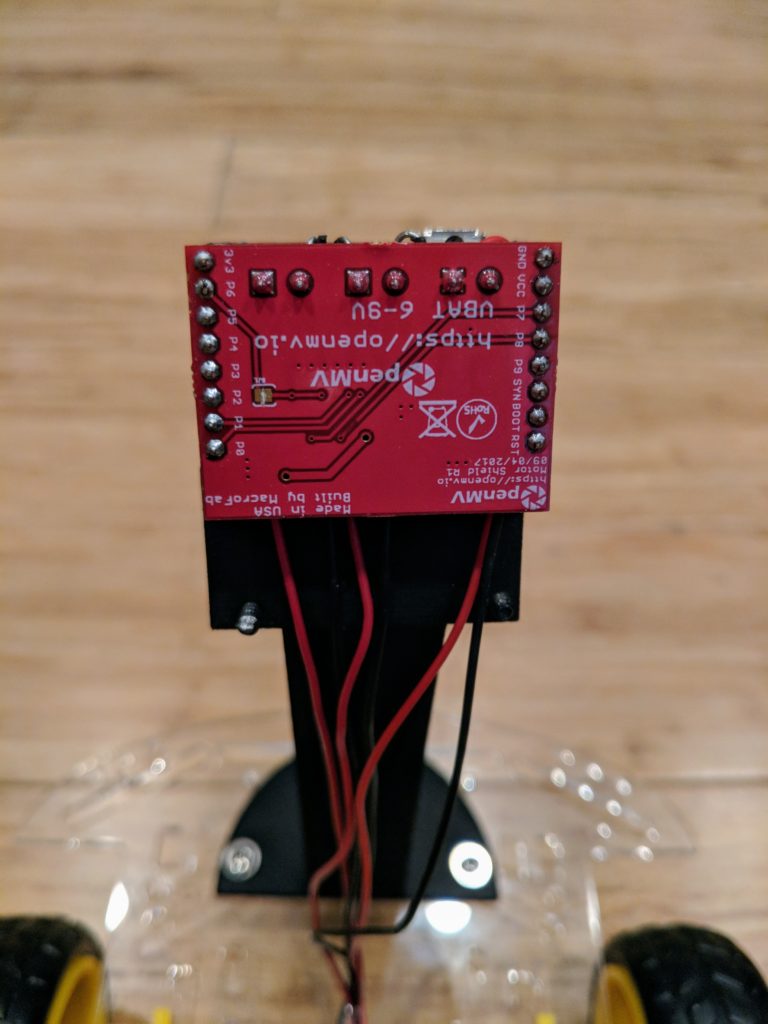

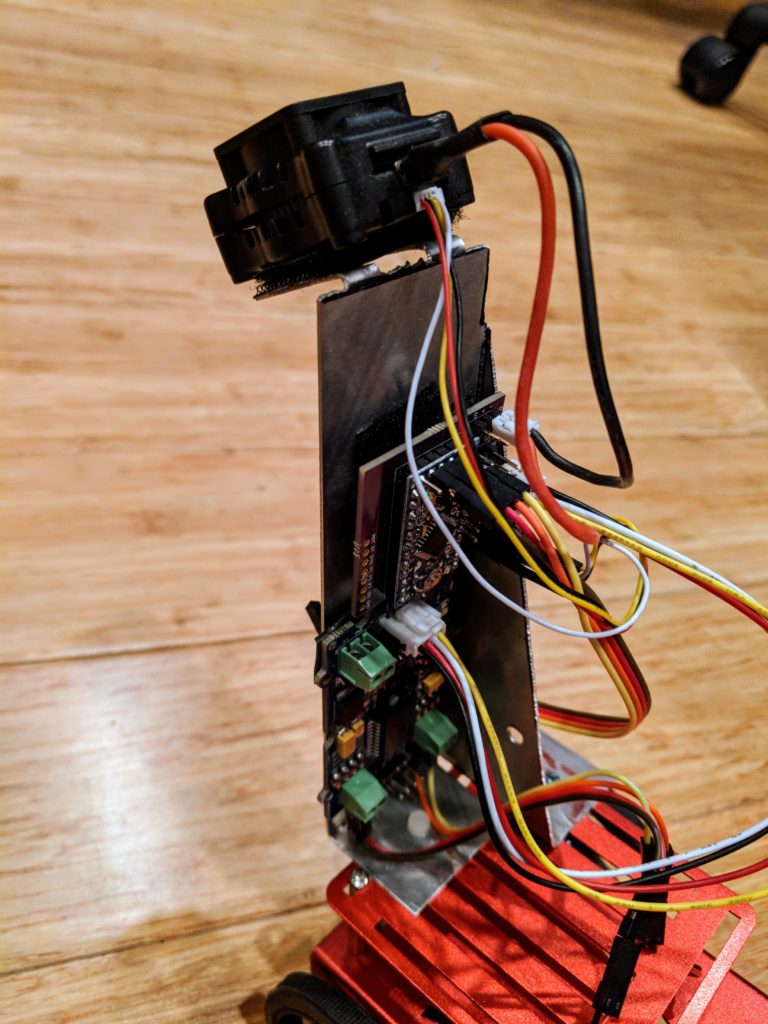

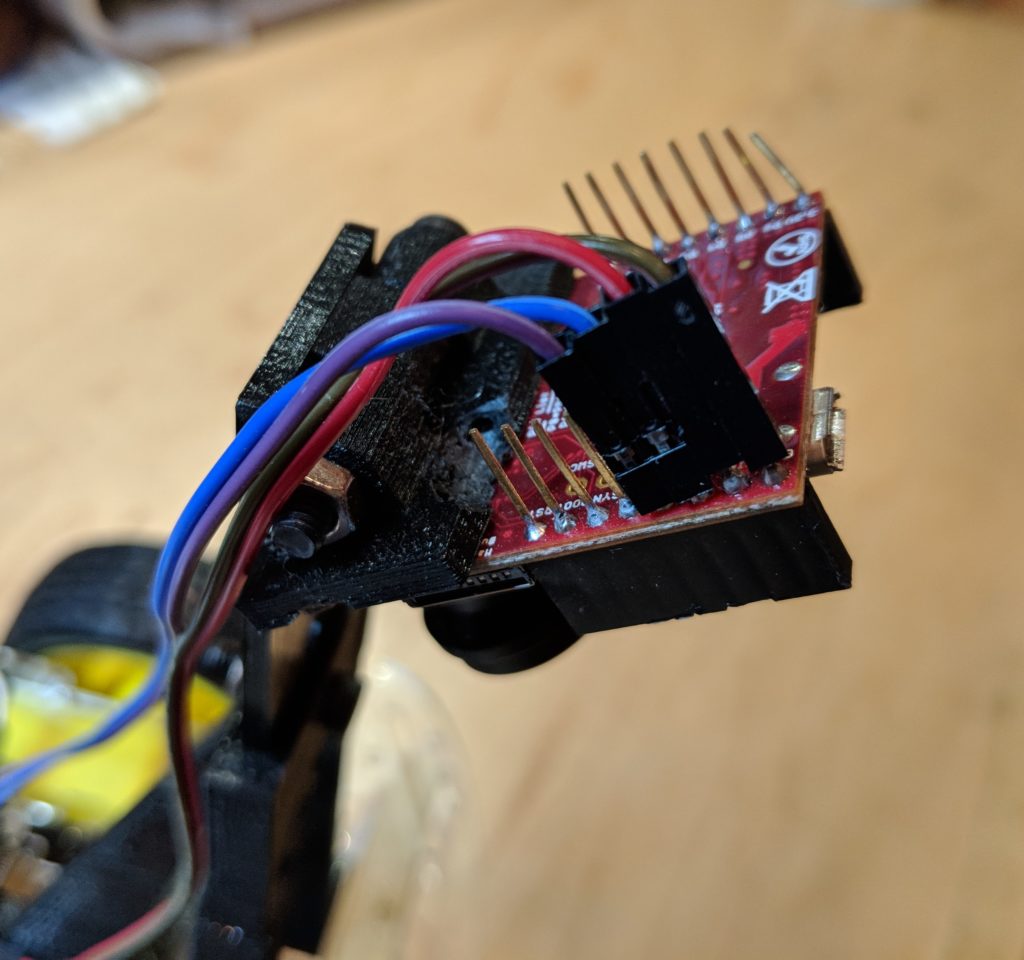

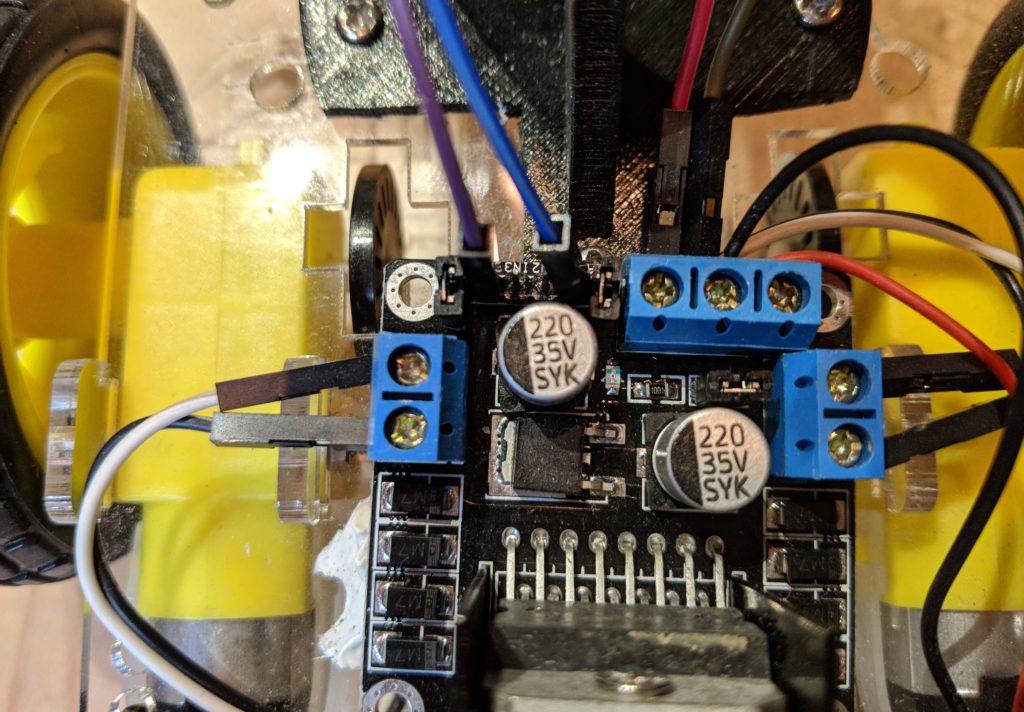

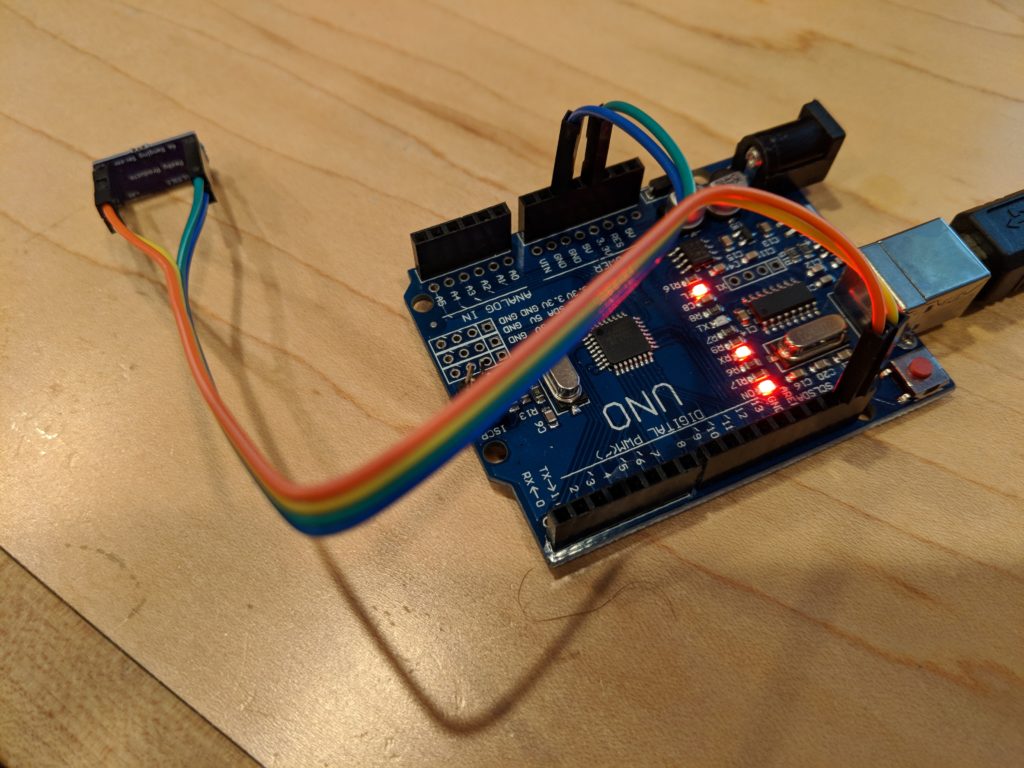

I’ve now created a version of that code that runs on the Minimum Viable Racer, too, using the stock OpenMV Motor Shield. No changes to the hardware are needed.

Get the code here

Notes:

It defaults to following a yellow line. To make it follow a different color, find color thresholds that work for your line using the IDE’s Tools/Machine Vision/Threshold Editor, then update the code:

- Ensure “BINARY_VIEW = False”, so you can see the actual colors of the track and not just the black-and-white results. You can switch this to “True” when you’re done if you want to see how well it’s working, which will generate an image like the above.

- Change “COLOR_THRESHOLDS = [( 94, 100, -27, 1, 20, 127)]” to the correct thresholds for the colors of the line you want to track.
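
If the six numbers look cryptic: OpenMV color thresholds are in the LAB color space, ordered as (L min, L max, A min, A max, B min, B max). Here is a small plain-Python sketch of what a threshold does to a single pixel. The helper functions and the sample pixel values are hypothetical, for illustration only; on the camera the matching happens inside the OpenMV firmware.

```python
# Default threshold from the racer code: a bright, yellowish range in LAB.
COLOR_THRESHOLDS = [(94, 100, -27, 1, 20, 127)]

def in_threshold(lab, threshold):
    """True if an (L, A, B) pixel falls inside one six-number threshold."""
    l, a, b = lab
    l_lo, l_hi, a_lo, a_hi, b_lo, b_hi = threshold
    return l_lo <= l <= l_hi and a_lo <= a <= a_hi and b_lo <= b <= b_hi

def matches_any(lab, thresholds):
    """True if the pixel matches at least one threshold in the list."""
    return any(in_threshold(lab, t) for t in thresholds)

# Illustrative pixels (approximate LAB values, not measured on a track).
bright_yellow = (97, -22, 94)  # roughly pure sRGB yellow
grey_floor = (60, 0, 2)        # a mid-grey surface

print(matches_any(bright_yellow, COLOR_THRESHOLDS))
print(matches_any(grey_floor, COLOR_THRESHOLDS))
```

The high L minimum means the default only matches very bright yellow, so under dim lighting you will probably need a wider L range from the Threshold Editor.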

These are some other things you can tweak:

    cruise_speed = 50
    steering_direction = 1   # use this to reverse the steering if your car goes in the wrong direction
    steering_gain = 1.0      # calibration for your car's steering sensitivity
    steering_center = 0      # set to your car's steering center point
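
To give a feel for how these four settings interact, here is a rough plain-Python sketch of one way they could be mixed into left and right motor speeds on a two-motor, differential-drive car. The `motor_speeds` function, the 0..100 speed range and the 90-degree normalization are assumptions made for this example; the racer code itself may combine the settings differently.

```python
cruise_speed = 50
steering_direction = 1
steering_gain = 1.0
steering_center = 0

def clamp(value, low, high):
    """Limit value to the range low..high."""
    return max(low, min(high, value))

def motor_speeds(line_angle, speed=cruise_speed, direction=steering_direction,
                 gain=steering_gain, center=steering_center):
    """Hypothetical mixer: turn a line angle into (left, right) speeds.

    line_angle is in degrees, positive when the line leans to the right.
    Returned speeds are assumed to be percentages in 0..100.
    """
    turn = direction * gain * (line_angle - center)
    turn = clamp(turn, -90, 90) / 90          # normalize to -1..1
    left = clamp(speed * (1 + turn), 0, 100)  # speed up the outside wheel
    right = clamp(speed * (1 - turn), 0, 100) # slow down the inside wheel
    return left, right

print(motor_speeds(0))                  # line straight ahead
print(motor_speeds(45))                 # line leaning right
print(motor_speeds(45, direction=-1))   # same line, steering reversed
```

In this sketch, flipping `steering_direction` simply swaps which wheel speeds up, a larger `steering_gain` makes the car react more sharply to the same angle, and `steering_center` shifts what counts as "straight ahead".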